Project Title and Overview

Title:

Neural Style Transfer using VGG19 and PyTorch

Overview:

This project implements Neural Style Transfer based on the paper by Gatys et al. The goal is to synthesize a new image that combines the content of one image with the style of another. A pre-trained VGG19 network extracts content and style features, and a generated image, initialized as a copy of the content image, is optimized to minimize a weighted sum of content and style losses. The result transfers the style of one image onto the content of another.

Project Objective

Objective:

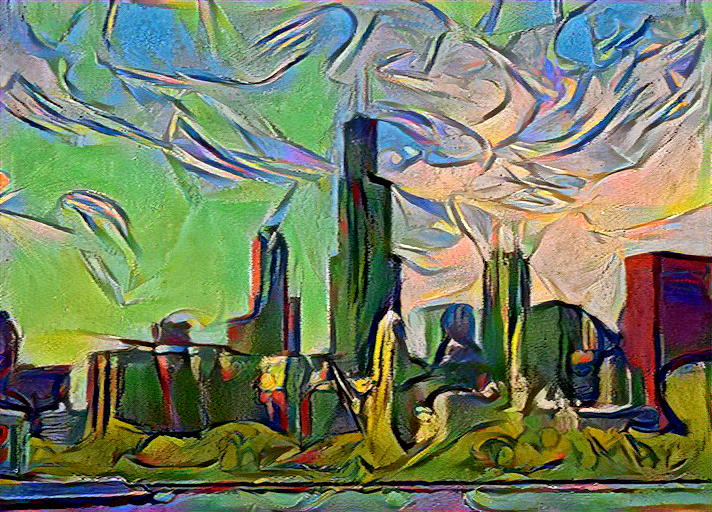

The goal is to synthesize an image that incorporates the content of one image and the style of another using neural networks. This can be applied in art, photography, and various design tasks, where the visual style of one work can be transferred to another.

Key Use Cases:

- Artistic Image Generation: Automatically create images in the style of famous artworks.

- Content Creation: Assist artists and designers in generating unique visual content by blending styles.

Technology Stack

Libraries:

- PyTorch: To define the loss functions and optimize the generated image (the VGG19 weights stay fixed).

- Torchvision: To pre-process images and load the VGG19 model.

- Matplotlib: For visualizing the generated images and loss variation.

- PIL: For image loading and resizing.

Neural Style Transfer Process

VGG19 Model:

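A pre-trained VGG19 network is used to extract features from the content, style, and generated images. Its weights stay frozen. Losses are computed on the activations of specific layers, and only the pixels of the generated image are updated, so that it matches the content of the content image and the style of the style image.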

Key VGG19 Layers for Style and Content:

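- Style Layers: The style of an image is represented using layers 0, 5, 10, 19, and 28 of the VGG19 feature extractor. In torchvision's `vgg19().features` indexing, these are the first convolution of each block: conv1_1, conv2_1, conv3_1, conv4_1, and conv5_1.

- Content Layers: The content of the image is represented using layer 21 (conv4_2 in the same indexing).

These layers are chosen because they capture different levels of abstraction. Earlier layers respond to low-level features such as colors, edges, and fine textures. Deeper layers capture higher-level structure such as object shapes and their arrangement. Using several style layers captures texture at multiple scales, while a single deeper content layer preserves the overall layout of the content image.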

```python
# Layers for style and content
style_layers = [0, 5, 10, 19, 28]  # Convolutional layers for style representation
content_layers = [21]  # Deeper convolutional layer for content representation
loss_layers = style_layers + content_layers  # style layers first, then content
```
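The layer indices can be turned into a feature extractor by running an image through the VGG19 layers one at a time. The activations at the listed indices are kept along the way. The following is one way to do this, assuming the network is torchvision's `vgg19(...).features` (an `nn.Sequential`). The helper names `prepare_features` and `extract_features` are hypothetical and not part of the project code. Torchvision's VGG uses in-place ReLU modules, which would overwrite a stored convolution output, so the sketch switches them to out-of-place.

```python
import torch
import torch.nn as nn


def prepare_features(features: nn.Sequential) -> nn.Sequential:
    """Freeze a VGG-style feature stack (hypothetical helper)."""
    features.eval()
    for p in features.parameters():
        p.requires_grad_(False)  # only the generated image is optimized
    for layer in features:
        if isinstance(layer, nn.ReLU):
            layer.inplace = False  # keep stored conv outputs from being overwritten
    return features


def extract_features(features: nn.Sequential, x: torch.Tensor, layer_ids):
    """Return the activations at `layer_ids`, in the order given (hypothetical helper)."""
    wanted, last = set(layer_ids), max(layer_ids)
    outputs = {}
    for idx, layer in enumerate(features):
        x = layer(x)
        if idx in wanted:
            outputs[idx] = x
        if idx == last:
            break  # deeper layers are not needed
    return [outputs[i] for i in layer_ids]
```

With the real network, this might look like `vgg = prepare_features(torchvision.models.vgg19(weights=torchvision.models.VGG19_Weights.DEFAULT).features)` followed by `extract_features(vgg, img, style_layers + content_layers)`. The `weights=` argument applies to recent torchvision releases; older releases used `pretrained=True`.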

Gram Matrix:

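The Gram matrix captures the style information of an image. For a given layer, it holds the inner products between every pair of feature maps (channels), summed over all spatial positions. It therefore records which features tend to activate together while discarding where they occur. This is what lets it retain the artistic texture of the style image without copying its layout.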
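Written out, suppose a layer's activations are reshaped into a matrix $F$ with $c$ rows (one per channel) and $hw$ columns (one per spatial position). The Gram matrix computed in this project is then given by the formula below. The division by $hw$ matches the `G.div_(h * w)` step in the `GramMatrix` module, whereas the original paper leaves the Gram matrix unnormalized.

$$
G_{ij} = \frac{1}{hw} \sum_{k=1}^{hw} F_{ik} F_{jk}, \qquad i, j = 1, \dots, c
$$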

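```python
import torch
import torch.nn as nn

# Defining the Gram Matrix
class GramMatrix(nn.Module):
    def forward(self, input):
        b, c, h, w = input.size()
        feat = input.view(b, c, h * w)  # flatten each feature map: (b, c, h*w)
        G = feat @ feat.transpose(1, 2)  # channel-by-channel inner products: (b, c, c)
        G.div_(h * w)  # normalize by the number of spatial positions
        return G
```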

Content and Style Loss Functions

Content Loss:

The content loss is the mean squared error (MSE) between the features of the content image and those of the generated image at the content layer (layer 21).

Style Loss:

The style loss is the MSE between the Gram matrix of the style image and that of the generated image, computed at each style layer.
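The following notation is used:

- $x$ is the generated image, $x_c$ the content image, and $x_s$ the style image.
- $F^{l}(x)$ denotes the activations of layer $l$ for image $x$, and $G^{l}(x)$ their Gram matrix.
- $w_l$ are the per-layer style weights and $w_c$ is the content weight.

In this notation, the losses described above are as follows, where MSE averages over all entries:

$$
\mathcal{L}_{\text{content}} = \operatorname{MSE}\big(F^{21}(x),\, F^{21}(x_c)\big), \qquad
\mathcal{L}_{\text{style}}^{l} = \operatorname{MSE}\big(G^{l}(x),\, G^{l}(x_s)\big)
$$

$$
\mathcal{L}_{\text{total}} = \sum_{l \in \{0, 5, 10, 19, 28\}} w_l \, \mathcal{L}_{\text{style}}^{l} + w_c \, \mathcal{L}_{\text{content}}
$$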

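```python
import torch.nn as nn
import torch.nn.functional as F

# Style loss: MSE between the Gram matrix of the generated image's features
# and a precomputed target Gram matrix (GramMatrix is defined above)
class GramMSELoss(nn.Module):
    def forward(self, input, target):
        return F.mse_loss(GramMatrix()(input), target)
```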

Weighted Loss:

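The total loss is a weighted sum of the five style-layer losses and the content-layer loss. Each style weight is 1000/n², where n is the number of channels in that layer (64, 128, 256, 512, and 512), and the content weight is 1. Raising the style weights relative to the content weight shifts the result toward stronger stylization. Lowering them favors better preservation of the content.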

```python
style_weights = [1000 / n**2 for n in [64, 128, 256, 512, 512]]  # Weights for style layers
content_weights = [1]  # Weight for content layer
weights = style_weights + content_weights  # style layers first, then the content layer
```
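The weight arithmetic can be made explicit with a small helper. The function name `make_weights` and its `style_scale` and `content_weight` parameters are hypothetical knobs for illustration, not part of the project code. With the defaults, the helper reproduces the list above, and `style_scale` is the main lever for trading stylization against content preservation.

```python
def make_weights(channels=(64, 128, 256, 512, 512), style_scale=1000.0, content_weight=1.0):
    """Per-layer loss weights: style layers first, then the content layer (hypothetical helper)."""
    # Each style layer is weighted by style_scale / n**2, where n is its channel count
    style_weights = [style_scale / n**2 for n in channels]
    return style_weights + [content_weight]


weights = make_weights()
# weights == [0.244140625, 0.06103515625, 0.0152587890625,
#             0.003814697265625, 0.003814697265625, 1.0]
```

Doubling `style_scale` doubles every style weight. For example, `make_weights(style_scale=2000.0)[0]` is `0.48828125`.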

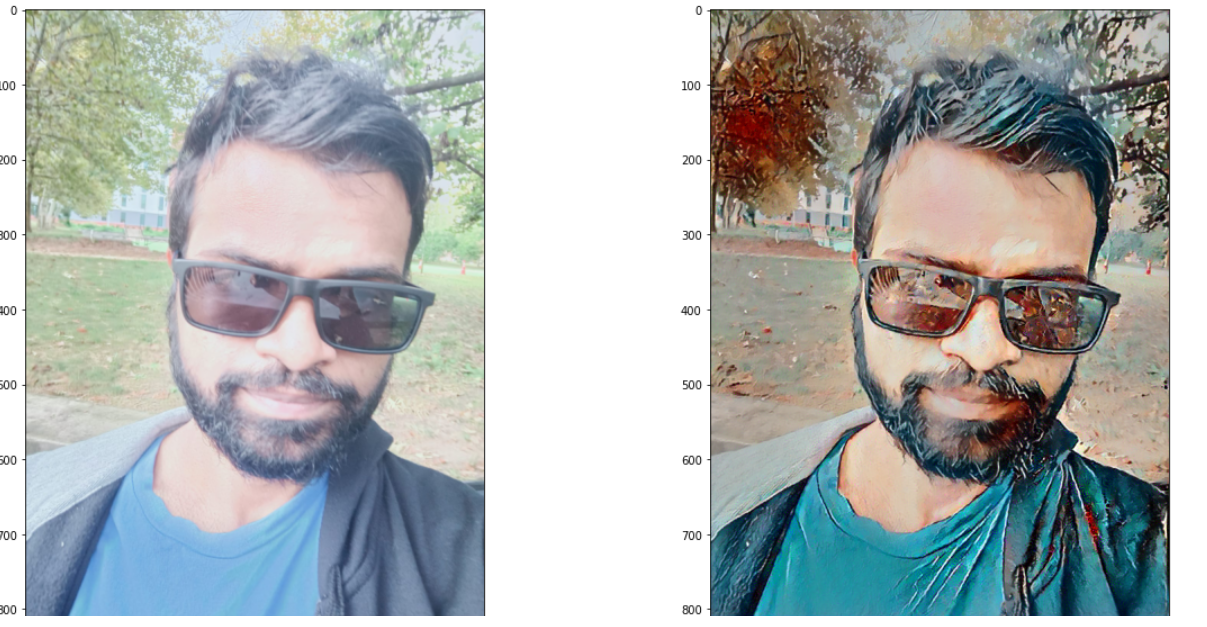

Training and Results

Training Process:

- Input: A content image and a style image are passed through the VGG19 network to extract the target features: Gram matrices at the style layers and feature maps at the content layer.

- Optimization: The generated image is updated iteratively to minimize the weighted sum of style and content losses. The LBFGS optimizer is used. It suits this problem because the objective is deterministic: the same two target images are used at every step, with no mini-batch noise, which is the setting quasi-Newton methods are designed for.

- Iterations: The optimization is capped at 6000 iterations (`max_iters`).

- Result: The synthesized image blends the content of the content image with the style of the style image.

```python
import torch
import torch.optim as optim

# Define device, iteration budget, and optimizer
device = 'cuda' if torch.cuda.is_available() else 'cpu'
max_iters = 6000
# LBFGS updates the pixels of opt_img, the generated image (a tensor with requires_grad=True)
optimizer = optim.LBFGS([opt_img], lr=0.1)
# First-order alternative (the results reported here use LBFGS):
# optimizer = optim.Adam([opt_img], lr=0.003)
```

Optimization Loop:

The optimization loop iteratively updates the generated image to minimize the loss, balancing content and style.

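```python
n_iter = [0]  # closure evaluations so far (a list so the closure can update it)

def closure():
    optimizer.zero_grad()
    # Features of the generated image at the style and content layers
    out = vgg(opt_img, loss_layers)
    # Weighted per-layer losses: Gram MSE for style layers, MSE for the content layer
    layer_losses = [weights[a] * loss_fns[a](A, targets[a]) for a, A in enumerate(out)]
    loss = sum(layer_losses)
    loss.backward()
    n_iter[0] += 1
    return loss

while n_iter[0] < max_iters:
    optimizer.step(closure)  # LBFGS may call the closure several times per step
```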

Result:

The optimization produces a synthesized image that combines the structure of the content image with the artistic style of the style image. Link to the project code on GitHub.

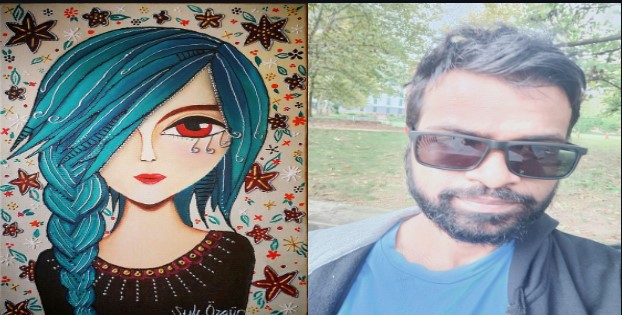

We applied the style of the left-hand image to the right-hand image.

Key Challenges

Challenges Faced:

- Balancing Content and Style: Achieving the right balance between retaining content structure and applying artistic style was a key challenge.

- Loss Convergence: Ensuring the loss converges to a visually pleasing result within a reasonable number of iterations.

Achievements and Future Work

Achievements:

- Successfully implemented Neural Style Transfer using VGG19.

- Generated high-quality images that effectively transfer style and maintain content structure.

- Optimized the process using LBFGS for faster convergence.

Future Work:

- Real-time Style Transfer: Speed up the process to achieve near real-time results.

- Multiple Style Combinations: Experiment with combining multiple styles into a single image.

Conclusion

This project demonstrates the successful implementation of Neural Style Transfer using deep learning techniques, combining content and style from two distinct images. The generated images have significant potential in the fields of art and design.

Thank you for your interest!